Once the internet took off and technology became part of almost every activity, people started voluntarily trading their privacy for convenience, and they have done so for years. Now, however, individuals are finally beginning to question this exchange and to pay more attention to their data privacy.

According to a study by Intouch International, 9 in 10 American internet users say they are concerned about the privacy and security of their personal information online. Moreover, 67% of them advocate for strict national privacy laws.

With the advent of artificial intelligence, whose success depends heavily on large amounts of data, ensuring individuals’ privacy has become paramount.

Hannah Fry’s book “Hello World: How to Be Human in the Age of the Machine” makes it clear that the information we give away, whether voluntarily or unintentionally, eventually ends up in large databases. In most cases, these databases are then exploited for a wide range of purposes, such as marketing, purchase recommendations and credit scoring.

One could argue that individuals technically give their consent by accepting the infamous ‘Terms and Conditions’. However, most user agreements are so lengthy that we may not even realize which privacy rights we are giving up.

How exactly is our privacy affected by AI?

SCORING AND RATINGS

We see AI as the largest collector and interpreter of data, yet we have rarely stopped to consider what this actually means. The information that artificial intelligence analyzes is often used to classify, evaluate and rank individuals. Apart from the fact that this is usually done without users’ consent, it can also lead to discrimination and missed opportunities.

Take, for example, Sesame Credit, a citizen scoring system run by Ant Financial, an Alibaba affiliate, and linked to the Chinese government’s plans for a national social credit system. Once this scoring becomes mandatory in 2020, people with low scores are expected to feel the repercussions in every aspect of their lives. Li Yingyun, the company’s technology director, said the details of its complex scoring algorithm would not be disclosed, but he did share some insight into how it works. For instance, someone who plays video games for a few hours a day is considered less reliable than someone who frequently buys diapers, since that shopping behaviour suggests they are probably a parent.

VOICE AND FACIAL RECOGNITION

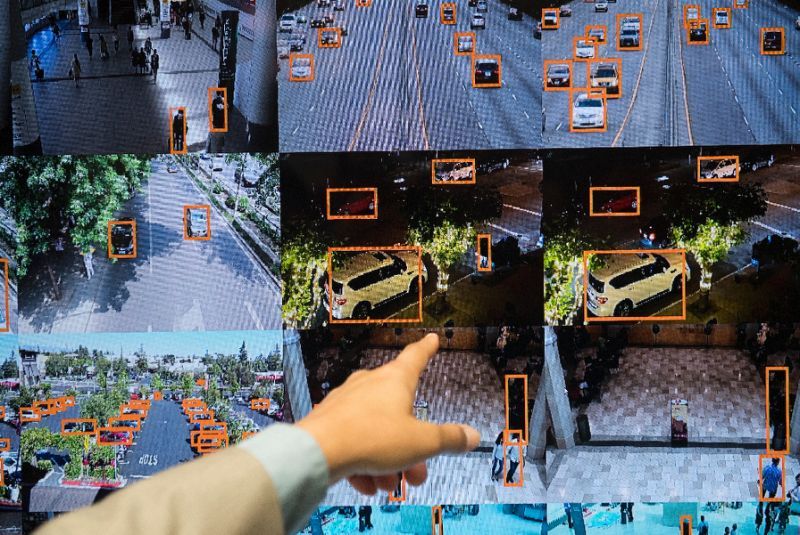

Voice and facial recognition are two methods of identification that AI increasingly relies on. The downside is that these methods can severely compromise anonymity in public spaces. To illustrate, consider a law enforcement agency that uses facial and voice recognition to track down individuals while bypassing the legal requirements that would normally apply.

Continue reading on Strongbytes’ blog.